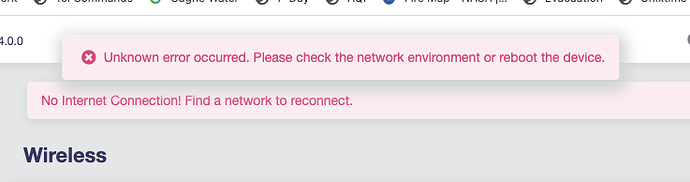

I’m seeing similar errors with mine sitting here on the bench.

Force-reloading the browser page does not help.

BusyBox v1.33.1 (2022-06-03 08:21:49 UTC) built-in shell (ash)

---------------------------------------------------

ApNos-349ddbc4-devel

OpenWrt 21.02-SNAPSHOT, r16273+114-378769b555

---------------------------------------------------

root@GL-AXT1800:~# uptime

21:42:50 up 5 days, 11:24, load average: 0.09, 0.04, 0.00

Connectivity is present, as expected:

root@GL-AXT1800:~# ping www.google.com

PING www.google.com (216.58.214.4): 56 data bytes

64 bytes from 216.58.214.4: seq=0 ttl=107 time=146.626 ms

64 bytes from 216.58.214.4: seq=1 ttl=107 time=147.743 ms

64 bytes from 216.58.214.4: seq=2 ttl=107 time=146.702 ms

^C

--- www.google.com ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 146.626/147.023/147.743 ms

This is the 2022-06-06 4.0.0 build:

BUILD_ID="r16273+114-378769b555"

Chrome developer console shows repeatedly, roughly every 10 seconds:

app.75d8ac5e.js:25 POST https://192.168.8.1/rpc 500 (Internal Server Error)

(anonymous) @ app.75d8ac5e.js:25

t.exports @ app.75d8ac5e.js:25

t.exports @ app.75d8ac5e.js:23

Promise.then (async)

l.request @ app.75d8ac5e.js:9

l.<computed> @ app.75d8ac5e.js:9

(anonymous) @ app.75d8ac5e.js:9

(anonymous) @ app.75d8ac5e.js:32

n @ app.75d8ac5e.js:32

getSystemStatus @ VM6195:7

getSystemStatus @ VM6195:7

eval @ VM6195:7

setTimeout (async)

getSystemStatusTimeout @ VM6195:7

getSystemStatus @ VM6195:7

eval @ VM6195:7

setTimeout (async)

getSystemStatusTimeout @ VM6195:7

getSystemStatus @ VM6195:7

eval @ VM6195:7

setTimeout (async)

getSystemStatusTimeout @ VM6195:7

getSystemStatus @ VM6195:7

eval @ VM6195:7

setTimeout (async)

getSystemStatusTimeout @ VM6195:7

getSystemStatus @ VM6195:7

eval @ VM6195:7

21:08:58.751 app.75d8ac5e.js:15 Uncaught (in promise) Error: Request failed with status code 500

at t.exports (app.75d8ac5e.js:15:64567)

at t.exports (app.75d8ac5e.js:23:93725)

at o.onreadystatechange (app.75d8ac5e.js:25:102839)

Typical RPC payload when failing:

{jsonrpc: "2.0", id: 141, method: "call",…}

id: 141

jsonrpc: "2.0"

method: "call"

params: ["JL7CRbzeZJdUsdJrlkQ9RPZo4rlhUxpC", "system", "get_status", {}]

The ["JL7CRbzeZJdUsdJrlkQ9RPZo4rlhUxpC", "wifi", "get_status", {}] call seems to succeed.
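For anyone who wants to reproduce this outside the browser, here is a rough Python sketch that sends the same payload shape to the `/rpc` URL from the console output. The function names `build_rpc_payload` and `post_rpc` are my own, and the `Content-Type` header and the self-signed-certificate handling are assumptions on my part, not something I have confirmed against the firmware.

```python
import json
import ssl
import urllib.error
import urllib.request


def build_rpc_payload(session_id, module, method, args=None, request_id=1):
    """Build a JSON-RPC 2.0 body shaped like the payload seen in the browser."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "call",
        "params": [session_id, module, method, args if args is not None else {}],
    }


def post_rpc(url, payload, timeout=10.0):
    """POST the payload and return (HTTP status, response body as text)."""
    # Assumption: the router presents a self-signed certificate, so
    # verification is switched off. Only do this for a device on your own bench.
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE

    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},  # assumed header
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout, context=context) as response:
            return response.status, response.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as err:
        # A 500 lands here; the body may say more than the console does.
        return err.code, err.read().decode("utf-8", "replace")
```

With a valid session ID, calling `post_rpc("https://192.168.8.1/rpc", build_rpc_payload(sid, "system", "get_status"))` and then the same with `"wifi"` in place of `"system"` should show whether the 500 is specific to the `system` call, and the returned body may carry an error message that the browser hides.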

Exporting the log fails, so I copied it manually from the window:

2022-07-04-axt1800.log.zip (3.5 KB)

There was an ISP outage on the 3rd as I recall. The AXT1800 would have been getting a valid DHCP assignment with connectivity to the gateway, but there would have been no upstream connectivity past that.

The machine has not been rebooted, so I can explore further if needed.